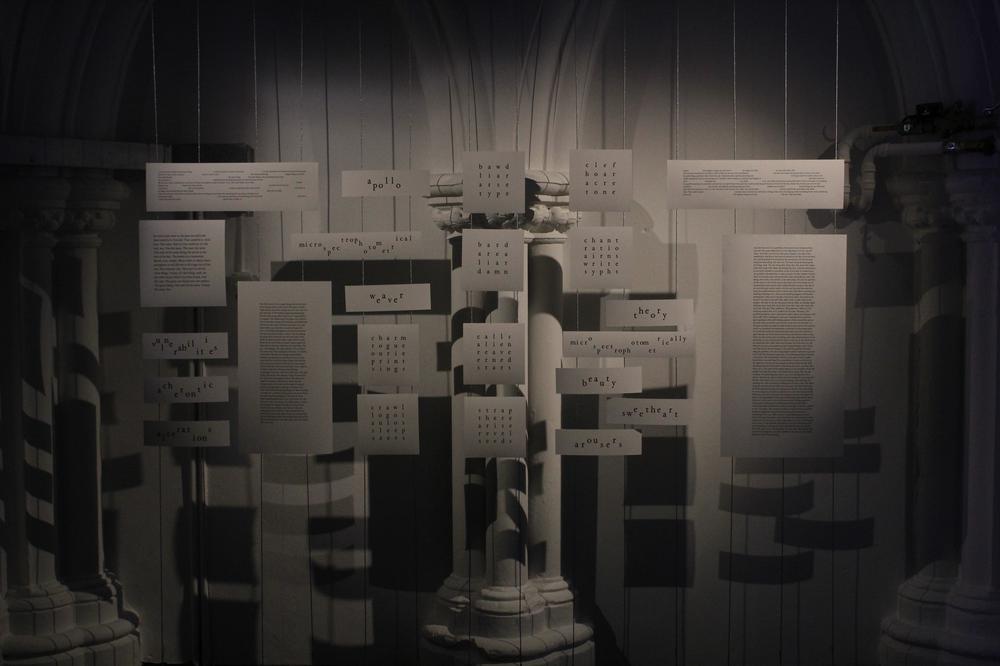

Recursus is a triptych of computational literature composed of WordSquares, Subwords and AIT, three modules using recursion and neural text generation. More information on the About page.

All posts follow hereafter. Category-based perusal is also possible:

-

The exhibition

-

Writing under computation

Recursus is a bit of a monster. Two of its arms, WordSquares and Subwords, are quite similar in scope and nature. Yet including only those would not have been satisfactory. Rather early on in my Oulipo-inspired research into word and letter constraints, I felt I was hitting a wall, albeit perhaps an internal one. The conclusion: my approach using these constraints is not free enough. It does foster practice, which had been somewhat flailing beforehand, but it corners me into finding word combinations, extremely constrained lexical clusters, the evocative powers of which I then set out to maximize. This leads to asyntactic poetics, where the poetic, literary space exists in the combined meanings of the words, with almost no regard for their arrangement. This works to some extent, and I manage to dream these spaces if the words are well chosen. However, the drive, the urge for syntax, for more than a crafted complex of words, remains, and I often find myself looking for primitive sentences, or phrases, within the squares or subword decompositions I am examining. AIT is the missing piece, the fledgling link to a restored sense of linearity and development. On its own, it would have been computationally much weaker. Despite my efforts in gathering knowledge of machine learning, and deep learning in particular, a sense of mastery and ease when developing a creative framework remains elusive. In my experience, this is the typical symptom of a phase of assimilation: seemingly no light at the end of the tunnel, despite active boring, until unexpected advances and familiarity kick in, and code, like writing perhaps, comes to the fingers. The creative interaction with the product of neural text generation is also closer to literary practice as commonly understood, an alleyway I am set to explore in the future.
Recursus, thus, as its name suggests, is both a return (to literary practice: writing, editing, imagination with constraints) and a step forward: the transition from a more straightforward, perhaps classical approach to computational literature (where the element of computation might have an overbearing importance in the finished pieces) to a less defined, but hopefully more fertile ground of symbiosis between the writing and the machine.

-

Typo

-

Turd/Tush/Wuss

-

Swarm yogee porns elite synes

-

Strap there arise

-

Strag

-

Shy pee art

-

Sacra iller loons enate semes

-

Grasp romeo enorm enure seres

-

Flaws robot afore stoln synds

-

Draft ratio unmew meant sends

-

Damp area mean pang

-

Crawl logoi

-

Cold hair arse type

-

Clept homer orate writs sylis

-

Clef

-

Clasp lover alane slits sylis

-

Chats/Chants

-

Chasm/Charm

-

Cases urine reede eager deeds

-

Caper array reign ended seers

-

Calls alien reave erned stars

-

Boys riot ally dyke

-

Bomb liar ulna eyed

-

Bawd/Bard

-

Barm oleo tsar hope

-

Arts best

-

Wastes accent scarce terror

-

Tastes accent scarce terror

-

Naples arrest pretty lethal estate styles

-

Lesbos escort scotia bother orient starts

-

Leader entire athena diesel ernest realty

-

Kisses intent stance sensor

-

Estate slaves talent avenue tenure esteem

-

Bricks regret ignore crowne kernel steele

-

Assess scenic sender endure sierra scream

-

While humor image logic erect

-

Games alert metro error storm

-

Basil/Bacon

-

Atlas whore aroma reset deeds

-

Squares Pop-up II

More 3-squares generated for the pop-up exhibition in May, but not exhibited.

-

Squares Pop-up

The batch of 3-squares presented at a pop-up exhibition in May this year.

-

I am still doing this

-

Lack impotence my entire life

-

The self-torture

-

I am the new ones

-

The world into some kind of curse

-

The hot-&-subtlety

-

The tension

-

I am the most in desire

-

Insane

-

Not the problem not at all

-

In the end of the world

-

The conclusion is the trivial thing

-

The fucking half-ugly thing

-

Freeliven die despatially

-

An art of the positive

-

The best of the old

-

You think you can get

-

The religion of course

-

So maybe something

-

I did not really come

-

I can feel it

-

I am this way

-

The old mind

-

So weak resist

-

Cython

The WordSquares conundrum did make me think about speed and optimisation. Friends in the field confirm that C++ (& C), Java, and compiled languages in general (as opposed to interpreted ones like Python) are much faster. The Cython library was built to give Python C-like speed (by adding static type declarations and compiling the code, loops included, to C, which greatly improves efficiency). Definitely a project for the future!

-

Wordsquares Mining

This is proving to be more difficult than expected.

-

Cloud Computing

For the first time in years, I feel the computational resources I have access to are not sufficient to achieve my goals.

-

Flared led far

-

Divine/Divide

-

Denude/Denied

-

Cunts

-

Atheist/Monist

-

Aspire sir ape

-

Arses

-

Apollo

-

Unsensationalistically

-

Undernationalised

-

Tautologies

-

Superrespectableness

-

Selfidentification

-

Representativeness

-

Radiotherapeutists

-

Over

-

Obstructionistical

-

Ness(es)

-

Deterritorialisation

-

Bitch

-

Autoradiographically

-

Anti

-

Anthropometrically

-

Administrationists

-

Microspectrophotometrical(ly)

-

Ethylmercurithiosalicylates

-

More Sources

My experience in the UK has led me to two odd conclusions: first, I tend to be inspired more directly, intimately and profoundly by ‘continental’ art (from France, Germany, Austria, Italy…) than by what I find in the UK (the case of the US is less clear); second, there is a near-absence, in London, of any transmission or visibility of so much of what I find inspiring in Continental Europe (more so for France than for Germany). As my discovery of digital practices occurred after my exile in the UK, I can safely assume that what I see as a relative ‘absence’ of such practices on the Continent is due to my ignorance, and to the already mentioned lack of proper visibility of these artists here, rather than to the fairly widely shared prejudice that the UK is far ahead on this front and that these practices have yet to emerge over there.

-

Max Woolf RNN

Thanks to Sabrina Recoule Quang, also in the course, I discovered Max Woolf’s repo for neural text generation (using LSTMs). The project is built in Keras/TensorFlow. This will certainly be a stepping stone for my study of these networks and of text generation in general. The goal is clear: run experiments generating texts, but also, hopefully soon, pick apart the code and gradually acquire the skills to build such networks myself.
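Character-level generators of this kind output, at each step, a probability for every possible next character, and many expose a ‘temperature’ setting that reshapes those probabilities before one is drawn. A minimal sketch of that sampling step in plain Python, assuming the probabilities have already been produced by a trained network (the ones below are made up, and `apply_temperature` and `sample_next` are hypothetical helpers, not functions from Max Woolf’s repo):

```python
import math
import random

def apply_temperature(probs, temperature):
    """Reweight a distribution: temperature < 1 sharpens it, > 1 flattens it.
    Assumes every probability is strictly positive (log(0) is undefined)."""
    logits = [math.log(p) / temperature for p in probs]
    top = max(logits)
    exps = [math.exp(logit - top) for logit in logits]  # subtract max for stability
    total = sum(exps)
    return [e / total for e in exps]

def sample_next(chars, probs, temperature, rng=random):
    """Draw one character according to the temperature-adjusted distribution."""
    weights = apply_temperature(probs, temperature)
    return rng.choices(chars, weights=weights, k=1)[0]

# Made-up next-character probabilities after a prefix such as "th".
chars = ["e", "a", "o"]
probs = [0.5, 0.3, 0.2]
for t in (0.5, 1.0, 2.0):
    print(t, [round(p, 3) for p in apply_temperature(probs, t)])
print(sample_next(chars, probs, 0.5, rng=random.Random(0)))
```

Low temperatures keep to the most likely characters (safer, more repetitive text); high ones let unlikely characters through (stranger, often less coherent text).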

-

Mital's CADL

After weeks of stagnation at the end of session 2 of Parag Mital’s course on Kadenze, some progress, but only up to the middle of session 4, which deals with the nitty-gritty of Variational Autoencoders… Steep, but since I set myself to copy every single line of code in these Jupyter Notebooks, the verbosity of TensorFlow has become a little less alien.

-

TFLearn

Slow and rather painstaking progress in Parag Mital’s course on Kadenze and its associated repo… TensorFlow really is verbose. I imagine one must be happy to have all these options once one masters the whole thing, but it does make the learning process more difficult. Another thing that comes to mind: as often happens, visual arts and music take the lion’s share in the computational arts business, and unsurprisingly Mital’s course focuses on them (although I noticed a textual model hidden deep in the repo, to be studied in due course).

-

Deep-speare

One more (usual?) attempt at making a network write Shakespeare sonnets. As usual, the results are semi-sensical (and not poetically overwhelming). Upside: they got the pentameters right!

-

Worldy/Wording

-

Weaver war eve

-

Vulnerabilities uni veil labs rite

-

Vagina

-

Timeless tile mess

-

Threats the rats

-

Theory/Ethics

-

Sweetheart ether sweat

-

Sin

-

Shadow how sad

-

Parrot pro art

-

Missionaries isis mine soar

-

Forgotten rotten fog

-

Explained expand lie

-

Closures loss cure

-

Beauty eat buy

-

Alterations

-

Xavier Initialization & Vanishing Gradients

A technical issue I came across when researching neural networks, especially recurrent neural networks and LSTMs, is what researchers call the ‘exploding or vanishing gradient problem’. When you try to make your network remember information from before, e.g. information from earlier in a text or other sequence, that information is stored as numbers that are repeatedly multiplied by and added to the current state. During training, the gradients flowing back through these steps go through the same repeated multiplications, so values often become either very large or very small. In the first RNNs, older information tended to weigh very little compared to recent information, which is one of the reasons the LSTM (long short-term memory) network was invented.
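The multiplicative mechanism can be seen in a few lines of NumPy. The sketch below pushes random data through a deep stack of linear layers: a feedforward stand-in rather than an RNN, although an unrolled RNN repeats a similar product over time steps and its gradients flow back through the same kind of product. With weights that are too small the signal vanishes, with weights that are too large it explodes, while Xavier (Glorot) initialisation, which draws weights with variance 2 / (fan_in + fan_out), keeps its scale roughly stable. Depth, width and the ‘too small’/‘too large’ ranges are arbitrary assumptions chosen for illustration.

```python
import numpy as np

def xavier_uniform(fan_in, fan_out, rng):
    """Glorot/Xavier uniform init: U(-a, a) with a = sqrt(6 / (fan_in + fan_out)),
    which gives the weights a variance of 2 / (fan_in + fan_out)."""
    limit = np.sqrt(6.0 / (fan_in + fan_out))
    return rng.uniform(-limit, limit, size=(fan_in, fan_out))

def too_small(fan_in, fan_out, rng):
    return rng.uniform(-0.01, 0.01, size=(fan_in, fan_out))

def too_large(fan_in, fan_out, rng):
    return rng.uniform(-1.0, 1.0, size=(fan_in, fan_out))

def signal_std_after(init, depth=20, width=100, seed=0):
    """Standard deviation of the values after `depth` linear layers (no activation)."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal((1000, width))  # 1000 samples, unit variance
    for _ in range(depth):
        x = x @ init(width, width, rng)  # each layer multiplies the signal again
    return float(x.std())

results = {name: signal_std_after(init)
           for name, init in [("xavier", xavier_uniform),
                              ("too_small", too_small),
                              ("too_large", too_large)]}
print(results)
```

With a width of 100, Xavier weights have variance 0.01, so each layer multiplies the signal’s variance by about 100 × 0.01 = 1, which is why its scale neither collapses nor blows up.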

-

Webscraping

Quick dreams of scraping.

-

Le Karpathy

Some more resources by Andrej Karpathy, already mentioned in Neural Steps:

-

Some Sources

A collection of some sources / people I came across who use computation for textual ends.

-

Word2Vec

Word2vec is a set of models used to produce word embeddings, that is, to create a high-dimensional vector space (usually hundreds of dimensions) in which each word is represented by a vector (a point in that space). This space is designed so that words occurring in similar contexts in the source corpora appear close to each other. Not only do these spaces allow similar words to appear in clusters, but the algorithm preserves certain semantic relationships in a remarkable fashion (e.g. the vector leading from the singular to the plural form of a noun remains roughly stable throughout the space, as does the one between the masculine and feminine versions of entities, etc.).
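That stable offset is what makes the well-known analogy arithmetic work: subtract one word vector, add another, and look for the nearest remaining word by cosine similarity. A toy sketch with hand-made three-dimensional vectors (hypothetical values, chosen so that the offsets are exactly constant; real word2vec vectors are learned from a corpus and only approximate this):

```python
import numpy as np

# Hypothetical toy embeddings: 3 dimensions instead of hundreds, hand-made so
# that the masculine/feminine and singular/plural offsets are exactly constant.
EMBEDDINGS = {
    "king":   np.array([0.9, 0.8, 0.1]),
    "queen":  np.array([0.9, 0.1, 0.1]),
    "man":    np.array([0.1, 0.8, 0.1]),
    "woman":  np.array([0.1, 0.1, 0.1]),
    "kings":  np.array([0.9, 0.8, 0.9]),
    "queens": np.array([0.9, 0.1, 0.9]),
    "apple":  np.array([0.0, 0.3, 0.2]),
}

def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def analogy(a, b, c, vocab=EMBEDDINGS):
    """Answer 'a is to b as c is to ?' with the word closest to b - a + c,
    excluding the three query words themselves."""
    target = vocab[b] - vocab[a] + vocab[c]
    candidates = [w for w in vocab if w not in {a, b, c}]
    return max(candidates, key=lambda w: cosine(vocab[w], target))

print(analogy("man", "king", "woman"))    # queen
print(analogy("king", "kings", "queen"))  # queens
```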

-

Wordsquares Update

Various updates on the Wordsquares front.

-

Subwords

Overhaul of a former (sketch of a) project, Subwords, now ported into Python.
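The post titles collected here (e.g. ‘Timeless tile mess’, ‘Beauty eat buy’) suggest what a decomposition looks like: the letters of a word are shared out, in order, between two or more shorter words. The following is a hypothetical recursive sketch of that idea, not the project’s actual code; `decompositions`, `subwords` and the tiny `LEXICON` are illustrative assumptions, and the brute-force search over letter subsets is only practical for short words.

```python
from itertools import combinations

# Hypothetical toy lexicon; a real run would load a full word list.
LEXICON = {"eat", "buy", "tile", "mess", "time", "less", "pro", "art"}

def decompositions(word, lexicon, min_len=2):
    """Yield lists of lexicon words that together use every letter of `word`
    exactly once, each word keeping its letters in their original order.
    Each part must include the first remaining letter, so the same split is
    not produced again with its parts in a different order."""
    if not word:
        yield []
        return
    n = len(word)
    for size in range(min_len - 1, n):
        for idx in combinations(range(1, n), size):
            picked = (0,) + idx
            part = "".join(word[i] for i in picked)
            if part not in lexicon:
                continue
            rest = "".join(word[i] for i in range(n) if i not in picked)
            # Recurse on the leftover letters.
            for tail in decompositions(rest, lexicon, min_len):
                yield [part] + tail

def subwords(word, lexicon=LEXICON):
    """Keep only genuine splits (at least two parts)."""
    return [parts for parts in decompositions(word, lexicon) if len(parts) > 1]

print(subwords("timeless"))  # [['time', 'less'], ['tile', 'mess']]
print(subwords("beauty"))    # [['buy', 'eat']]
```

Repeated letters (as in ‘parrot’) can yield the same split more than once, since different letter positions spell the same word; deduplicating the results would be a natural refinement.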

-

L'Art introuvable

Racking sense of failure. All this work (seemingly) for nothing. As if the only way I could do anything was through a slightly obsessional drowning in technical issues, such as recursive functions or the (already difficult) basics of machine learning…

-

Multiprocessing

Important discovery: it is possible to split tasks so that separate cores can work on them independently. I happen to have four on my machine, which already allows a substantial speed increase.
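In Python, the standard-library `multiprocessing` module offers this kind of split: a `Pool` of worker processes, by default one per core, shares out a list of independent tasks. A minimal sketch with a deliberately trivial task (computing anagram signatures, a hypothetical stand-in for heavier work such as word-square searches); the `if __name__ == "__main__":` guard is needed on platforms that start workers by spawning a fresh interpreter.

```python
from collections import defaultdict
from multiprocessing import Pool, cpu_count

def signature(word):
    """Letters in alphabetical order: anagrams share the same signature."""
    return "".join(sorted(word))

def group_anagrams(words, processes=None):
    """Compute signatures in parallel, then group words that share one."""
    # Pool.map splits `words` into chunks, sends them to the worker
    # processes and returns the results in the original order.
    with Pool(processes=processes or cpu_count()) as pool:
        signatures = pool.map(signature, words, chunksize=max(1, len(words) // 16))
    groups = defaultdict(list)
    for word, sig in zip(words, signatures):
        groups[sig].append(word)
    return {sig: ws for sig, ws in groups.items() if len(ws) > 1}

if __name__ == "__main__":
    words = ["stale", "least", "slate", "tales", "steal",
             "apple", "arts", "star", "rats", "tsar"]
    print(group_anagrams(words))
```

For a task this small, starting the processes costs more than it saves; the gain appears with genuinely heavy, independent tasks.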

-

LSTMs & Sequentialia

Resources for steps into what is likely to matter most: networks working with linear sequences, e.g. text.

-

DeepSpeak

An idea is taking shape: transposing DeepDream to text (see this page for a break-down of what happens in deep neural nets, the foundation for DeepDream images).

-

More NN Steps

Back into the neural foundry. Found this repo, which is a fine, simple piece of help to accompany Mital’s tougher tutorials (still at session 3 unfortunately, on autoencoders).

-

TensorBoard

For future reference, an introductory video on TensorBoard, a tool to visualise the inner workings of a TensorFlow network. Could come in handy if I succeed in hopping onto the machine learning bandwagon.

-

Datashader (plotting big data)

Whilst working on visualisations for my WordSquares project, I ran into problems due to the size of the dataset I wanted to plot. Digging into the Bokeh library, I found out about Datashader, a library that specialises in large datasets (e.g. billions of points).
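The core idea behind Datashader can be sketched without it: instead of drawing every point, first aggregate the points into a fixed grid of pixels (counting how many fall into each), then map the counts to colours, so that the cost of rendering depends on the canvas size rather than on the number of points. A rough NumPy-only illustration of that aggregate-then-shade step (this is not Datashader’s API; the canvas size, the log scaling and the random data are arbitrary assumptions):

```python
import numpy as np

def aggregate(x, y, width=400, height=300):
    """Count how many points fall into each pixel of a width x height canvas."""
    counts, _, _ = np.histogram2d(y, x, bins=(height, width))
    return counts  # shape (height, width), whatever the number of points

def shade(counts):
    """Map counts to 0-255 grey levels on a log scale, so that sparse
    regions remain visible next to very dense ones."""
    logged = np.log1p(counts)
    peak = logged.max()
    if peak == 0:
        return np.zeros(counts.shape, dtype=np.uint8)
    return (logged / peak * 255).astype(np.uint8)

rng = np.random.default_rng(42)
n = 1_000_000
x, y = rng.standard_normal(n), rng.standard_normal(n)
counts = aggregate(x, y)
image = shade(counts)
print(counts.shape, int(counts.sum()), image.max())
```

The resulting array is small enough to hand to any plotting library as an image, which is where the gain lies when the raw points number in the millions.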

-

WordSquares Redux

Finally ported my ofxWordSquares to Python, leading to an improved wordsquare generator. The progress has been fairly gratifying, especially the implementation of a DAWG (directed acyclic word graph) for prefix search.
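Prefix search is what makes word squares tractable: once some rows are placed, the next row must start with the letters already fixed by the columns. The generator itself is not described here, so the following is only a minimal sketch of prefix-driven recursive backtracking for the simpler symmetric case, where row i reads the same as column i (as in ‘Damp area mean pang’). It uses a plain trie built from nested dicts; a DAWG goes further by also merging shared word endings, which saves memory without changing the logic of the search. `build_trie`, `words_with_prefix` and `word_squares` are hypothetical names.

```python
def build_trie(words):
    """Nested dicts, one level per letter; "$" marks the end of a word."""
    root = {}
    for w in words:
        node = root
        for ch in w:
            node = node.setdefault(ch, {})
        node["$"] = w
    return root

def words_with_prefix(trie, prefix):
    """All words stored below the node reached by following `prefix`."""
    node = trie
    for ch in prefix:
        if ch not in node:
            return []
        node = node[ch]
    found, stack = [], [node]
    while stack:
        current = stack.pop()
        for key, child in current.items():
            if key == "$":
                found.append(child)
            else:
                stack.append(child)
    return found

def word_squares(words, size):
    """Symmetric word squares: row k must equal column k."""
    words = [w for w in words if len(w) == size]
    trie = build_trie(words)
    results = []

    def extend(rows):
        k = len(rows)
        if k == size:
            results.append(list(rows))
            return
        # Letters already fixed in column k by the rows placed so far.
        prefix = "".join(row[k] for row in rows)
        for candidate in words_with_prefix(trie, prefix):
            rows.append(candidate)
            extend(rows)
            rows.pop()  # backtrack

    for first in words:
        extend([first])
    return results

print(word_squares(["damp", "area", "mean", "pang", "dame"], 4))
```

Squares whose rows and columns are different words (like ‘Bomb liar ulna eyed’) need two prefix checks per placement, one for the rows and one for the columns, but follow the same backtracking pattern.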

-

The Vi-rus

Started learning Vim. Difficult to explain where that comes from. I think I like the command-based approach and the abstraction. As usual, there is the lure of ‘productivity’, hailed by Vim defenders as the new (in fact quite old) dawn of text processing. I can now imagine the sort of improvements one can get out of it, especially once one has stepped into the milky bath of macros. Soon enough. For now, it is still pretty much a battle, as I tend to want to learn all the commands in one go, rather than letting myself take it in chunk by chunk.

-

NN Steps

Steps toward neural networks. Slow steps. I have been working on completing Parag Mital’s Creative Applications of Deep Learning with TensorFlow, but there is hardship ahead. The first two lessons were rather heavy, especially as they required adapting to Python as well as to a cohort of libraries and utilities such as NumPy, SciPy and Matplotlib, and a not-so-smooth recap on matrices and other mathematical beasts.

-

JavaScript Temptations (RiTa)

A clear temptation for the future: learn JavaScript properly and work with the browser. There are various reasons behind this idea, and one I would like to stress now is the existence of the RiTa library, a set of tools for text analysis and generation in JavaScript, which could be an excellent excuse for:

- Getting into JS in the first place;

- Moving toward a routine based on small incremental projects;

- Practising with NLP tools (alongside NLTK in Python).

-

Increments vs Shifts

After our collective session on Wednesday, and Saskia’s remarks about incremental practice, a few thoughts came to mind. First, it is quite difficult, in the current situation, to produce small works or prototypes with the knowledge I have (that is, my knowledge of Machine Learning, and especially Deep Learning), or at least so my brain says. I have come to doubt the efficiency of my approach, which until now has mostly been to work with relatively large chunks of knowledge and equally large projects, slow but deep, that I can only handle one at a time. If I were to continue with this methodology for my final project, that would mean continuing to study Parag Mital’s course on TensorFlow, then finding other resources on Machine/Deep Learning for text generation, and only then starting to play with and produce bits of work.

-

Singular Value Decomposition

Several machine learning resources I looked at (before being overwhelmed).

-

Vector Resolution

How about seeing the size of the feature vector as ‘resolution’, in the same way as the number of pixels used to represent an image? The more dimensions you have, the closer you get to human perception (just as it takes several million pixels to reach photographic accuracy).
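One way to make the analogy concrete is truncated singular value decomposition: keep only the k largest singular values of a matrix whose rows are vectors, and each row becomes a k-dimensional vector, a bit like an image downsampled to fewer pixels. The sketch measures how much of the matrix is lost at each ‘resolution’ k; the random 200 × 50 matrix is a hypothetical stand-in for, say, word/context counts.

```python
import numpy as np

def truncated(matrix, k):
    """Best rank-k approximation of `matrix` (Eckart-Young), via SVD."""
    u, s, vt = np.linalg.svd(matrix, full_matrices=False)
    return u[:, :k] @ np.diag(s[:k]) @ vt[:k, :]

def reduced_vectors(matrix, k):
    """Each row re-expressed as a k-dimensional vector."""
    u, s, _ = np.linalg.svd(matrix, full_matrices=False)
    return u[:, :k] * s[:k]

def relative_error(matrix, k):
    """Share of the matrix (Frobenius norm) lost at resolution k."""
    return float(np.linalg.norm(matrix - truncated(matrix, k)) / np.linalg.norm(matrix))

rng = np.random.default_rng(1)
data = rng.random((200, 50))  # hypothetical: 200 "words" x 50 "contexts"
errors = {k: relative_error(data, k) for k in (1, 5, 10, 25, 50)}
print(reduced_vectors(data, 10).shape)  # (200, 10)
print(errors)
```

As with pixels, the error shrinks as k grows and vanishes (up to rounding) at full rank.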

-

Tasks

Steps into Parag Mital’s course ‘Creative Applications of Deep Learning with TensorFlow’ on Kadenze. Very slow. Most of the time is dedicated to learning the tools needed to get into deep learning and neural networks, namely Python itself (although that has become rather natural) and specific libraries such as NumPy, for many mathematical operations, and Matplotlib, for plotting and statistical graphs. On top of that, I need to refresh my knowledge of matrices, the obvious building block of so much machine learning today. A good YouTube channel for quick recaps of linear algebra: 3Blue1Brown. As usual with a lot of creative coding, I will have to deal with the fact that most resources are dedicated to image processing (even if Natural Language Processing is also an important field). This often feels like a waste of time, especially as the mathematical operations are different, but most of what I learn will be necessary anyway. A major issue is the sense of slowness I get from these learning sessions, where even the simplest concepts and manipulations often require me to ponder, pause and rest for far longer than I would wish.
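To see why matrices are such a basic building block: a fully connected neural network layer is essentially one matrix product plus a bias, followed by a simple non-linearity. A small NumPy sketch with made-up numbers (a ReLU is assumed as the non-linearity):

```python
import numpy as np

# A batch of 2 inputs with 3 features each (made-up numbers).
x = np.array([[1.0, 2.0, 3.0],
              [0.0, 1.0, 0.0]])
# Weights mapping 3 input features to 2 outputs, plus one bias per output.
W = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [1.0, -1.0]])
b = np.array([0.5, 0.5])

def dense(x, W, b):
    """One fully connected layer: matrix product, bias, then ReLU (max(0, v))."""
    return np.maximum(0.0, x @ W + b)

print(dense(x, W, b))  # rows: [4.5, 0.0] and [0.5, 1.5]
```

Every input in the batch is processed by the same single product `x @ W`, which is why so much of deep learning reduces to efficient matrix multiplication.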

-

First Thoughts

The project will be centred around text:

- inventing textual constraints and using computation to produce texts;

- using computation and machine learning to explore the resulting space of possibilities;

- expanding my knowledge of machine learning and other tools in the process.

The texts are mostly going to be ‘poems’, or textual fragments, but producing prose, or simply longer textual objects, is one of my goals.